4 Common Ecommerce A/B Testing Mistakes (And How to Avoid Them)

Whether you’re exploring new ecommerce software or adding a trust badge to your site, one day you may need to A/B test your online store. A/B testing, also known as split testing, is a tool often used by businesses to improve the customer journey and increase conversions.

According to Invesp, 60% of companies believe A/B testing is highly valuable for conversion rate optimization, and 63% believe it is not difficult to implement. That may often be true, but you still need to be careful about how you set up your test. There are plenty of traps that can make your results less valuable, and in some cases they can give you the wrong answer entirely.

We’ve been running tests for over a decade and have unfortunately seen some of our merchants misled by bad data. Here we’ll go over the most common flaws we see in ecommerce A/B testing, so you can avoid making important business decisions based on inaccurate or misleading data.

4 Common Ecommerce A/B Test Mistakes to Avoid

- Not Excluding Internal Traffic

- Ignoring Abnormally Large Sessions or Transaction Counts

- Having Discrepancies in Transaction Count

- Making Impulse Decisions on Insufficient Data

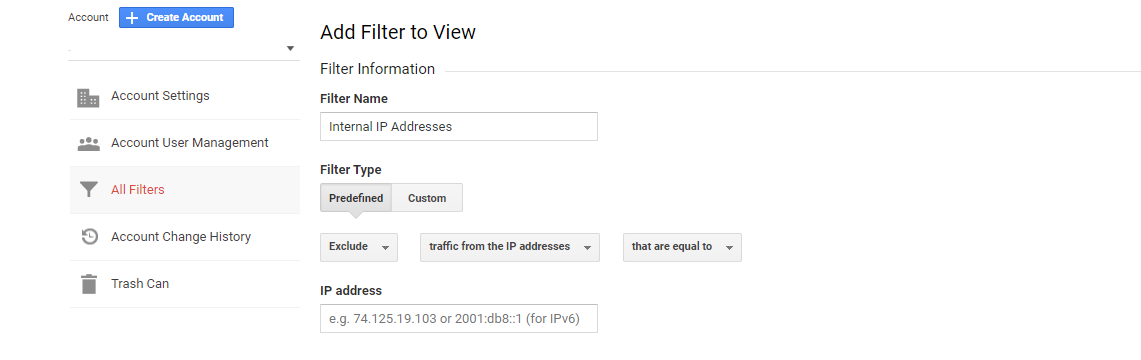

1. Not Excluding Internal Traffic

Now, with so many people working from home because of the pandemic, it’s a good idea to verify that your internal traffic is being filtered out of your web analytics platform. In Google Analytics, this can be accomplished by filtering out the IP addresses of:

- Business offices

- Remote work employees

- Retail locations

- B2B partners (if they’re frequent buyers)
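Google Analytics applies these filters inside the platform. If you also analyze exported session or order data yourself, you can apply the same exclusion after the fact. The Python sketch below is a minimal illustration under stated assumptions: each record carries the visitor's IP address under a hypothetical `ip` key, and the network ranges are reserved documentation addresses used as placeholders, not real office IPs.

```python
import ipaddress

# Hypothetical internal networks (reserved documentation ranges used as placeholders);
# replace them with the real addresses of your offices, stores, remote staff, and partners.
INTERNAL_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),   # e.g. a business office
    ipaddress.ip_network("198.51.100.7/32"),  # e.g. one remote employee
]

def is_internal(ip: str) -> bool:
    """Return True if the address falls inside any internal network."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in INTERNAL_NETWORKS)

def exclude_internal(sessions: list[dict]) -> list[dict]:
    """Drop sessions whose (hypothetical) 'ip' field belongs to internal traffic."""
    return [s for s in sessions if not is_internal(s["ip"])]

# Usage: only the external visitor survives.
print(exclude_internal([{"ip": "203.0.113.45"}, {"ip": "192.0.2.10"}, {"ip": "198.51.100.7"}]))
```

Remote employees' home IP addresses may change over time, so a list like this needs periodic review.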

See Google's step-by-step tutorial on how to filter out internal traffic in Google Analytics.

2. Ignoring Abnormally Large Sessions or Transaction Counts

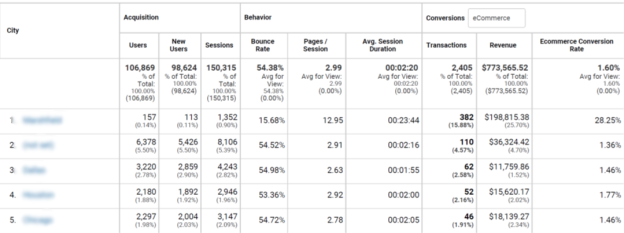

Another useful check is to look for outliers: abnormally large session counts, transaction counts, or revenue. We’ve seen this happen with our merchants. Here’s an example:

This ecommerce store owner had previously worked with a vendor who did not exclude the company’s internal traffic from an A/B test. We were able to check this in a matter of seconds by running the “City” report in Google Analytics. We noticed an abnormally high number of transactions and an unusually long average session duration in the city where the company is headquartered, which is the #1 city shown above. The company’s internal traffic and transactions were not being filtered out of Google Analytics, so the results were inflated.
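The City-report check above can be partly automated. The sketch below is a simple heuristic rather than a formal outlier test: it flags any city whose transaction count or conversion rate exceeds a chosen multiple of the median across all cities. It assumes a hypothetical export of the report as `(city, sessions, transactions)` rows, and the `factor` of 5 is an arbitrary starting point to tune for your store.

```python
from statistics import median

def flag_outlier_cities(rows: list[tuple[str, int, int]], factor: float = 5.0) -> list[str]:
    """Flag cities whose transactions or conversion rate exceed factor x the median.

    rows: hypothetical (city, sessions, transactions) tuples exported from a City report.
    Note: if the median transaction count is 0, any city with transactions is flagged.
    """
    typical_tx = median(tx for _, _, tx in rows)
    typical_cr = median(tx / sessions for _, sessions, tx in rows if sessions > 0)
    flagged = []
    for city, sessions, tx in rows:
        cr = tx / sessions if sessions else 0.0
        if tx > factor * typical_tx or cr > factor * typical_cr:
            flagged.append(city)
    return flagged

# Usage with made-up numbers: one city stands far above the rest.
report = [
    ("HQ City", 1200, 300),
    ("City A", 1000, 20),
    ("City B", 900, 18),
    ("City C", 1100, 25),
    ("City D", 800, 15),
]
print(flag_outlier_cities(report))
```

A flagged city is a prompt to investigate, for example by checking whether it matches an office or warehouse location. It is not proof of internal traffic on its own.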

Concentrations of sessions or transactions can also uncover other common issues. Do you partner with other businesses through your website? Business buyers behave differently from typical consumers, so you should think about them differently and A/B test them accordingly.

3. Having Discrepancies in Transaction Count

It is crucial to make sure your analytics tool is tracking transactions accurately when A/B testing your store. In our blog on ecommerce A/B testing best practices, we explained, “Duplicate transactions typically happen when users restore the order confirmation page from a closed browser session on their desktop or, most often, their mobile device.” Duplicates can come from a variety of sources and can skew your data, leading you to believe you’ve generated more sales than you actually did. Before calculating the results of your test, review your analytics data and remove duplicate and internal transactions. Here’s a guide on detecting and fixing duplicate transactions.
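If you export transaction data for analysis, duplicates can be removed by keeping only the first record for each transaction ID. The Python sketch below is a minimal illustration. It assumes each record is a dictionary with a hypothetical `transaction_id` key and that a repeated ID always means a duplicate rather than a genuinely separate order.

```python
def dedupe_transactions(transactions: list[dict]) -> list[dict]:
    """Keep the first record for each transaction ID and drop later repeats."""
    seen: set[str] = set()
    unique = []
    for tx in transactions:
        tx_id = tx["transaction_id"]  # hypothetical field name
        if tx_id in seen:
            continue  # a repeat, e.g. from a restored order confirmation page
        seen.add(tx_id)
        unique.append(tx)
    return unique

# Usage: order 1001 was recorded twice, so reported revenue was overstated.
raw = [
    {"transaction_id": "1001", "revenue": 50.0},
    {"transaction_id": "1002", "revenue": 20.0},
    {"transaction_id": "1001", "revenue": 50.0},
]
clean = dedupe_transactions(raw)
print(len(raw), len(clean), sum(tx["revenue"] for tx in clean))
```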

Duplicate transactions are not the only thing to look out for. Analytics platforms can just as easily under-report transactions. Causes range from simple coding mistakes to alternate payment paths that are missing the proper tracking code, and they are easy to miss when you’re caught up in the day-to-day of growing your business.

Revealing discrepancies in your transaction counts can be as simple as comparing the number of orders your analytics platform reports with the number of orders your business actually fulfilled in the same timeframe. We’ve seen this simple comparison uncover duplicate or missing orders at rates of 20-30%, and sometimes more. An accurate A/B test depends on an accurate transaction count.
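The comparison described above can be done order by order, not just as a total, so duplicates and missing orders do not cancel each other out. The Python sketch below assumes a hypothetical setup in which the transaction ID recorded by your analytics platform matches the order number in your fulfillment system. Reported IDs that never appear in fulfillment may be test or internal orders worth a closer look.

```python
from collections import Counter

def reconcile_orders(reported_ids: list[str], fulfilled_ids: list[str]) -> dict:
    """Compare analytics transaction IDs with fulfilled order IDs for the same period."""
    counts = Counter(reported_ids)          # how many times each ID was reported
    reported = set(counts)
    fulfilled = set(fulfilled_ids)
    duplicates = sum(c - 1 for c in counts.values() if c > 1)
    missing = len(fulfilled - reported)     # fulfilled but never reported
    unmatched = len(reported - fulfilled)   # reported but not found in fulfillment
    n = len(fulfilled)
    return {
        "reported": len(reported_ids),
        "fulfilled": n,
        "duplicate_rate": duplicates / n if n else 0.0,
        "missing_rate": missing / n if n else 0.0,
        "unmatched_reported": unmatched,
    }

# Usage: "B" was reported twice, "D" was never reported, "X" was never fulfilled.
print(reconcile_orders(["A", "B", "B", "C", "X"], ["A", "B", "C", "D"]))
```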

4. Making Impulse Decisions on Insufficient Data

The biggest mistake we see businesses make is rushing to decisions based on insufficient data when A/B testing their website. In a world where everything moves so quickly, it's hard not to decide in haste. Don't fall into the trap of making website changes based on early test data, which can give you misleading results. Be patient. You’re running an A/B test to improve your business. It’s important, and worth doing right.

Here are some best practices we use for our own A/B testing:

Run the A/B test for a minimum of two weeks:

Typical ecommerce sales cycles run 2-7 days, meaning shoppers spend 2-7 days deciding what they want to buy from you. Shoppers also behave differently on weekdays than on weekends. Running the test for at least two weeks allows at least two complete buying cycles (more when cycles are shorter) and covers every day of the week twice, giving you a good mix of customers.
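To see why whole weeks matter, the sketch below counts how often each day of the week falls inside a test window and how many complete buying cycles the window spans. It is a minimal illustration: the function name and the `cycle_days` parameter are hypothetical, and it treats a buying cycle as a fixed number of days, which real shoppers won't follow exactly.

```python
from collections import Counter
from datetime import date, timedelta

def describe_test_window(start: date, days: int, cycle_days: int) -> dict:
    """Summarize a test window: end date, complete buying cycles, weekday balance."""
    # Count how often each weekday (0 = Monday ... 6 = Sunday) occurs in the window.
    weekday_counts = Counter((start + timedelta(days=i)).weekday() for i in range(days))
    return {
        "end": start + timedelta(days=days - 1),
        # Whole buying cycles that fit inside the window.
        "complete_cycles": days // cycle_days,
        # True only if every weekday appears in the window the same number of times.
        "balanced_weekdays": len(weekday_counts) == 7
        and len(set(weekday_counts.values())) == 1,
    }

# Usage: compare a 14-day window with a 10-day one, assuming a 7-day buying cycle.
print(describe_test_window(date(2024, 1, 1), 14, 7))
print(describe_test_window(date(2024, 1, 1), 10, 7))
```

A 10-day window over-represents some weekdays, which can bias results if weekday and weekend shoppers convert differently.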

Generate enough transactions for increased confidence in results:

Without getting into the technical details of statistical significance, you’ll test variations on your website by splitting your visitors into groups. A good rule of thumb for A/B testing conversion rate on a typical ecommerce website is to plan for at least 400 transactions in each group. We’ve found this to be the minimum starting point required to be confident in making decisions. It’s important to check your results with a significance calculator, as some tests may require more transactions to reach significant results.
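Many conversion-rate significance calculators are based on a two-proportion z-test. The sketch below is a minimal, standard-library version for comparing two groups. It assumes conversion rate is transactions divided by sessions and treats sessions as independent trials, which is an approximation. It is not the method of any particular calculator, and with more than two groups, pairwise comparisons would also need a correction for multiple comparisons, which this sketch does not do.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    conv_a, conv_b: transactions in each group; n_a, n_b: sessions in each group.
    Returns (z, p_value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both groups convert at 0% or 100%; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Usage: 400 vs 500 transactions, with 20,000 sessions in each group.
z, p = two_proportion_z_test(400, 20_000, 500, 20_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below your chosen threshold (0.05 is a common choice) suggests the difference is unlikely to be chance alone. It says nothing about whether the underlying data was clean, which is why the checks above still matter.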

(Image: 2-group A/B test compared to a 5-group A/B test)

For example, if you’re A/B testing the addition of a trust badge to your website, a common approach is a 2-group test: your website with a trust badge vs. your website without one. We’ve found that each group should have at least 400 transactions to give you confidence in the results. If you’re testing more than one variation, such as your website without a trust badge vs. four different trust badge variations on your website, that’s a 5-group test. Each group still needs at least 400 transactions for the data to carry weight in your decision making.
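The 400-transactions-per-group rule of thumb translates into a minimum test length once you know your daily transaction volume. The sketch below assumes traffic is split evenly across groups and that daily transactions stay roughly constant, which real stores rarely guarantee. The function name and parameters are hypothetical. It also applies the two-week minimum and rounds up to whole weeks.

```python
import math

def plan_test_days(groups: int, daily_transactions: float,
                   per_group: int = 400, min_days: int = 14) -> tuple[int, int]:
    """Return (days_to_run, total_transactions_needed) for an evenly split test."""
    total_needed = groups * per_group
    # Days needed to collect enough transactions at the current daily rate.
    days = math.ceil(total_needed / daily_transactions)
    # Never go below the two-week minimum, then round up to whole weeks
    # so every day of the week is represented equally.
    days = max(days, min_days)
    return math.ceil(days / 7) * 7, total_needed

# Usage: a store averaging 100 transactions per day.
print(plan_test_days(2, 100))  # 2-group test
print(plan_test_days(5, 100))  # 5-group test
```

At 100 transactions a day, the 2-group test collects its 800 transactions well within the two-week minimum. The 5-group test needs 2,000 transactions, which takes 20 days and therefore rounds up to three full weeks.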

How Do A/B Test Results Impact Decision Making?

Making your decision requires more than simply looking at which group won. It’s time for a little due diligence. Ask yourself whether the A/B test results are within your expectations from prior testing. Look at the results through the lens of your marketing campaigns to see whether they’re consistent. Were you also running a large holiday campaign during the experiment? Watch for other factors, like promotions and special events, that could also affect sales. A/B test results that look “too good to be true” are just as useful as “bad results” for spotting any of the common mistakes above.

Checking for these four common mistakes is fairly simple, but it is essential for conducting an A/B test you can rely on. Because A/B tests are so crucial to decision making in ecommerce, at buySAFE we have an in-house data scientist who crunches the numbers and tests the accuracy of results. When running an A/B test on a merchant’s website, we check for these common issues and many others to make sure the results are as accurate as possible.

Conclusion

Here’s our most important piece of advice: don’t just read your A/B test results, analyze them thoroughly. Although Google Analytics and other tools have made it easy to run an A/B test, it’s just as easy to make costly mistakes. Your test results require some critical thinking before you can use them to make decisions. Ask yourself: Do the numbers make sense? Do they agree with my core business metrics?

Getting accurate data is vital when A/B testing your ecommerce store. Making decisions based on flawed data can be detrimental to your business. It’s like getting the wrong diagnosis for an ailment and then treating it with the wrong medicine: the actual problem doesn’t get resolved, and new issues can arise.

The same goes for your ecommerce website. When done properly, A/B testing is an excellent way to improve your ecommerce store and increase revenue. However, if the numbers seem too good to be true, investigate carefully before making any decisions based on the results.

Stay in the know

Subscribe to the buySAFE blog and receive the latest in ecommerce best practices.